Continuous Monitoring - Best Practices

Today, I want to share some best practices for continuously monitoring machine learning models so that you can tackle drift effectively. Drift, a change over time in the input data or in the relationship between inputs and targets that degrades model performance, can be a significant challenge when deploying ML models in production. By applying these practices, together with the code examples, you can keep your ML systems robust and reliable. Let's dive in!

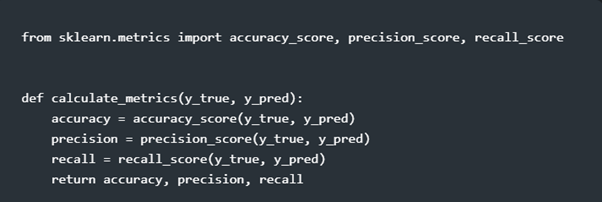

Define Relevant Metrics: Start by identifying the key performance indicators (KPIs) for your model. These could include accuracy, precision, recall, F1 score, or any other metrics suited to your specific use case. Discuss business metrics with stakeholders before choosing which ones to track; agreeing on them early avoids back-and-forth later. Incorporate these metrics into your monitoring pipeline so that model performance is evaluated regularly.

Example code in Python using scikit-learn:

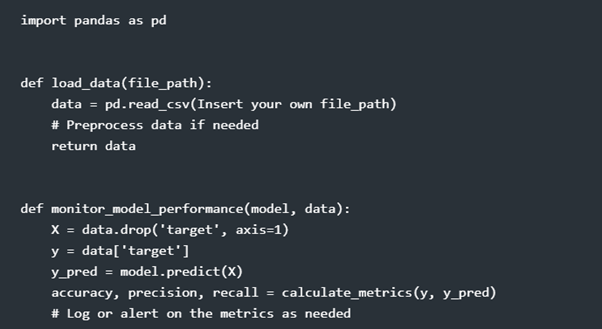
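A minimal, dependency-free sketch of this step, assuming a binary classifier with 0/1 labels: compute the metrics for a batch of labelled predictions and report any that fall below the minimums agreed with stakeholders. The function names and threshold values are hypothetical; scikit-learn's `sklearn.metrics` module provides `accuracy_score`, `precision_score`, `recall_score` and `f1_score` for the same quantities.

```python
from dataclasses import dataclass


@dataclass
class BinaryMetrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def compute_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary classifier."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Return 0.0 when a ratio is undefined (no positive predictions or labels).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return BinaryMetrics(accuracy, precision, recall, f1)


def check_thresholds(metrics, thresholds):
    """Return the names of metrics that fall below their agreed minimum."""
    return [name for name, minimum in thresholds.items()
            if getattr(metrics, name) < minimum]


if __name__ == "__main__":
    metrics = compute_metrics([1, 0, 1, 1, 0, 1, 0, 0], [1, 0, 0, 1, 0, 1, 1, 0])
    # Hypothetical minimums agreed with stakeholders.
    print(check_thresholds(metrics, {"accuracy": 0.8, "f1": 0.7}))  # -> ['accuracy']
```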

Establish a Monitoring Schedule: Set up a regular monitoring schedule to track model performance. Determine how often you will evaluate your model, considering factors such as data volume, the expected rate of drift, and how critical the system is. Depending on these factors, monitoring can run in real time or at predefined intervals.

Example code in Python using scikit-learn and pandas:

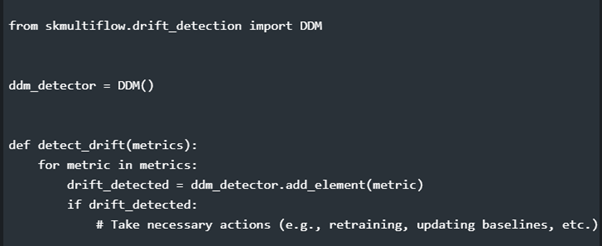
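One way to evaluate at predefined intervals, sketched with the standard library only: bucket logged predictions into fixed-width time windows (daily here, an assumption to tune to your data volume and expected drift rate), compute accuracy per window, and flag any window below a minimum. The record layout `(timestamp, y_true, y_pred)` and the function names are hypothetical. With pandas, the same grouping is typically written as `df.resample("1D", on="timestamp")["correct"].mean()` on a boolean `correct` column.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def windowed_accuracy(records, window=timedelta(days=1), start=None):
    """Group (timestamp, y_true, y_pred) records into fixed-width windows
    and return {window_start: accuracy}, ordered by window start."""
    if not records:
        return {}
    # Align windows to `start` if given, otherwise to the earliest record.
    origin = start if start is not None else min(ts for ts, _, _ in records)
    hits = defaultdict(int)
    counts = defaultdict(int)
    for ts, y_true, y_pred in records:
        index = (ts - origin) // window  # timedelta // timedelta -> int
        key = origin + index * window
        counts[key] += 1
        hits[key] += int(y_true == y_pred)
    return {key: hits[key] / counts[key] for key in sorted(counts)}


def flag_windows(window_scores, minimum):
    """Return the start of every window whose accuracy is below `minimum`."""
    return [key for key, score in window_scores.items() if score < minimum]


if __name__ == "__main__":
    logged = [
        (datetime(2024, 1, 1, 9), 1, 1),
        (datetime(2024, 1, 1, 15), 0, 0),
        (datetime(2024, 1, 2, 10), 1, 0),
        (datetime(2024, 1, 2, 11), 0, 0),
    ]
    scores = windowed_accuracy(logged, start=datetime(2024, 1, 1))
    print(flag_windows(scores, minimum=0.8))  # -> [datetime.datetime(2024, 1, 2, 0, 0)]
```

In production, a scheduler (cron, an orchestration tool, or a streaming job) would run this evaluation at the chosen interval; the sketch only covers the evaluation itself.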

Detect Drift: Implement drift detection mechanisms to identify when model performance deviates significantly from the expected behavior. Statistical methods can be used for this: the Kolmogorov-Smirnov test compares the distribution of a feature or model score against a reference sample, while the Drift Detection Method (DDM) and the Page-Hinkley test monitor a stream of errors or metric values for a significant change. Compare current metrics against a baseline or historical data to determine the extent of the drift.
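A minimal, dependency-free sketch of the two-sample Kolmogorov-Smirnov test for one numeric feature: compute the largest gap between the empirical CDFs of a reference sample (for example, training data) and a recent production sample, and compare it with the standard large-sample critical value c(alpha) * sqrt((n + m) / (n * m)), where c(alpha) = sqrt(-ln(alpha / 2) / 2). The function names are hypothetical; if SciPy is available, `scipy.stats.ks_2samp` returns the statistic together with a p-value.

```python
import math


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest absolute gap between the
    empirical CDFs of the two samples."""
    a, b = sorted(reference), sorted(current)
    n, m = len(a), len(b)
    if n == 0 or m == 0:
        raise ValueError("both samples must be non-empty")
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Step past every value equal to x in both samples (handles ties).
        while i < n and a[i] == x:
            i += 1
        while j < m and b[j] == x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d


def ks_drift(reference, current, alpha=0.05):
    """Return (drift_detected, statistic, critical_value) using the
    large-sample approximation of the KS critical value."""
    d = ks_statistic(reference, current)
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return d > critical, d, critical


if __name__ == "__main__":
    drift, d, critical = ks_drift(list(range(100)), list(range(50, 150)))
    print(drift)  # -> True
```

With large production samples the test can flag differences too small to matter, so it is often paired with a minimum effect size on the statistic itself.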

Note: again, these are standard techniques; we will go into more detail in upcoming articles.

Example code in Python using scikit-multiflow:
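scikit-multiflow ships ready-made detectors in `skmultiflow.drift_detection` (including `DDM` and `PageHinkley`, fed one value at a time with `add_element()` and queried with `detected_change()`); the project has since been merged into River. To show what such a detector does internally, here is a minimal from-scratch sketch of the classic Page-Hinkley test for an upward shift in the mean of a stream, such as a per-prediction error (1 for a mistake, 0 otherwise). The class name and parameter defaults are illustrative assumptions, not tuned values.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a stream.

    m_T  = sum over t of (x_t - mean_t - delta), with mean_t the running mean
    M_T  = min over t of m_t
    Alarm when m_T - M_T > threshold (lambda).
    """

    def __init__(self, delta=0.005, threshold=50.0, min_instances=30):
        self.delta = delta                  # tolerated magnitude of change
        self.threshold = threshold          # lambda: alarm threshold
        self.min_instances = min_instances  # observations before alarms allowed
        self.reset()

    def reset(self):
        self.n = 0
        self.mean = 0.0
        self.cum_sum = 0.0  # m_T
        self.min_sum = 0.0  # M_T

    def update(self, x):
        """Add one observation; return True if a change is detected."""
        self.n += 1
        self.mean += (x - self.mean) / self.n  # running mean up to this point
        self.cum_sum += x - self.mean - self.delta
        self.min_sum = min(self.min_sum, self.cum_sum)
        if self.n >= self.min_instances and self.cum_sum - self.min_sum > self.threshold:
            self.reset()  # start monitoring the new concept from scratch
            return True
        return False


if __name__ == "__main__":
    detector = PageHinkley(threshold=10.0)
    # 200 correct predictions followed by a run of errors.
    errors = [0.0] * 200 + [1.0] * 50
    print([i for i, e in enumerate(errors) if detector.update(e)])  # -> [210]
```

A lower `threshold` reacts faster but raises more false alarms; DDM follows a similar incremental pattern but tracks the error rate and its standard deviation instead.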

Adapt and Update: When drift is detected, take appropriate action to adapt your ML model to the new data patterns. This may involve retraining the model, updating feature engineering processes, adjusting hyperparameters, or even collecting new data (in upcoming articles we will see how to build queuing systems that hold new data until it is processed). Regularly iterate on and refine your ML system to maintain optimal performance.
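As a hedged sketch of one adaptation strategy, retraining on recent data (the other options, such as revisiting features or hyperparameters, usually need human judgement), the wrapper below keeps a sliding window of the most recent labelled examples and refits the model on that window whenever a drift detector fires. `model` is assumed to be any callable mapping one input to a prediction, `train_fn` a hypothetical function that takes `(X, y)` lists and returns a new such callable, and `detector` any object exposing `update(error) -> bool`, such as a Page-Hinkley test.

```python
from collections import deque


class RetrainOnDrift:
    """Keep a sliding window of recent labelled examples and retrain the
    model on that window whenever the drift detector raises an alarm."""

    def __init__(self, model, train_fn, detector, window_size=1000):
        self.model = model          # assumed: callable, x -> prediction
        self.train_fn = train_fn    # hypothetical: (X, y) -> new model
        self.detector = detector    # assumed: update(error) -> bool
        self.window = deque(maxlen=window_size)  # oldest examples drop out
        self.retrain_count = 0

    def observe(self, x, y_true):
        """Score one labelled example; retrain and return True on drift."""
        y_pred = self.model(x)
        self.window.append((x, y_true))
        error = float(y_pred != y_true)  # 1.0 for a mistake, 0.0 otherwise
        if self.detector.update(error):
            X, y = zip(*self.window)
            self.model = self.train_fn(list(X), list(y))
            self.retrain_count += 1
            return True
        return False
```

A bounded `deque` keeps memory constant and makes the retrained model reflect the new concept rather than a mix of old and new data; the window size trades reaction speed against having enough examples to fit on.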

Continuous monitoring is key to ensuring the long-term success of machine learning models. By defining relevant metrics, establishing a monitoring schedule, detecting drift, and adapting accordingly, we can effectively address drift and maintain the performance of our models. Let's stay vigilant and proactive in our ML deployments!

If you're interested in learning more about this topic or have any insights to share, feel free to connect with me. Let's empower each other in the exciting world of machine learning!

#MachineLearning #ContinuousMonitoring #DriftDetection #DataScience #LinkedInPost

Giancarlo Cobino

Speak to Qvantia today. We would be very happy to help - info@qvantia.com